---
layout: post
title: "MinPy: The NumPy Interface upon MXNet’s Backend"
date: 2017-01-18 00:00:00 -0800
author: Larry Tang and Minjie Wang
comments: true
---

Machine learning is now enjoying its golden age. In the past few years, its effectiveness has been proven by solving many traditionally hard problems in computer vision and natural language processing. At the same time, a variety of machine learning frameworks have emerged to meet different needs. These frameworks generally fall into two categories: symbolic programming and imperative programming.

## Symbolic vs. Imperative Programming

Symbolic and imperative programming are two different programming models. Symbolic programming is represented by TensorFlow, MXNet’s symbol system, Theano, and others. In the symbolic programming model, executing a neural network takes two steps: the graph of the computational model is defined first, and then the defined graph is sent for execution. For example:

~~~python
import numpy as np
# Illustrative pseudocode: Variable, Constant and compile stand for the
# primitives of a generic symbolic framework, not a specific library's API.
A = Variable('A')
B = Variable('B')
C = B * A
D = C + Constant(1)
# compiles the function
f = compile(D)
d = f(A=np.ones(10), B=np.ones(10)*2)
~~~

(Example taken from [[1][]])

The core idea here is that the computational graph is defined beforehand, which means that definition and execution are separated. The advantage of symbolic programming is that the computational boundary is precisely specified, which makes it easier to apply deep optimizations. However, symbolic programming has its limitations. First, it cannot gracefully handle control dependencies. Second, mastering a new symbolic language is hard for a newcomer. Third, since the description and the execution of the computational graph are separated, it is difficult to relate execution to value instantiation in the computation.
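To make the pseudocode above concrete, here is a minimal runnable sketch of a toy symbolic system in Python with NumPy. `Node`, `Variable`, `Constant`, `Op`, `as_node` and `compile_graph` are hypothetical names made up for illustration, not the API of TensorFlow, Theano or MXNet. The only point it shows is that building `C` and `D` computes nothing; values appear only when the compiled function is called.

~~~python
import numpy as np


class Node:
    """Base class of a toy symbolic graph (hypothetical); building nodes computes nothing."""

    def __mul__(self, other):
        return Op(np.multiply, self, as_node(other))

    def __add__(self, other):
        return Op(np.add, self, as_node(other))


class Variable(Node):
    def __init__(self, name):
        self.name = name

    def evaluate(self, feed):
        return feed[self.name]  # value is supplied only at execution time


class Constant(Node):
    def __init__(self, value):
        self.value = value

    def evaluate(self, feed):
        return self.value


class Op(Node):
    def __init__(self, fn, lhs, rhs):
        self.fn, self.lhs, self.rhs = fn, lhs, rhs

    def evaluate(self, feed):
        return self.fn(self.lhs.evaluate(feed), self.rhs.evaluate(feed))


def as_node(x):
    return x if isinstance(x, Node) else Constant(x)


def compile_graph(output):
    """'Compile' step of the toy: returns a callable that walks the graph recursively."""
    def run(**feed):
        return output.evaluate(feed)
    return run


# Definition stage: only graph nodes are created here.
A = Variable('A')
B = Variable('B')
C = B * A
D = C + Constant(1)
# Execution stage: concrete arrays flow through the graph.
f = compile_graph(D)
d = f(A=np.ones(10), B=np.ones(10) * 2)
~~~

A real framework would run graph-level optimizations such as operator fusion or memory planning inside its compile step; this toy skips that and simply evaluates the graph.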

So what about imperative programming? In fact, most programmers already know imperative programming quite well: almost all everyday C, Pascal, or Python code is imperative. The fundamental idea is that every command is executed step by step, without a separate stage to define the computational graph. For example:

~~~python
import numpy as np
a = np.ones(10)
b = np.ones(10) * 2
c = b * a
d = c + 1
~~~

(Example taken from [[1][]])

[1]: http://mxnet.io/architecture/program_model.html

When the code executes `c = b * a` and `d = c + 1`, it simply runs the actual computation. Compared to symbolic programming, imperative programming is much more flexible, since definition and execution are not separated. This is important for debugging and visualization. However, the computational boundary is not predefined, which makes system optimization harder. NumPy and Torch adopt the imperative programming model.

MXNet is a “mixed” framework that aims to provide both symbolic and imperative styles and leaves the choice to its users. While MXNet has a superb symbolic programming subsystem, its imperative subsystem is not as powerful as other imperative frameworks such as NumPy and Torch. This leads to our goal: a fully functional imperative framework that focuses on flexibility without much performance loss, and works well with MXNet’s existing symbol system.

## What is MinPy?

MinPy aims to provide a high-performance and flexible deep learning platform by prototyping a pure [NumPy](http://www.numpy.org/) interface on top of the [MXNet](https://github.com/dmlc/mxnet) backend. In short, you get the following automatically with your NumPy code, simply by writing:

~~~python
import minpy.numpy as np
~~~

- Operators with GPU support will be run on the GPU.
- Graceful fallback to NumPy on the CPU for missing operations.
- Automatic gradient generation with Autograd support.
- Seamless MXNet symbol integration.

## Pure NumPy, purely imperative

Why the obsession with the NumPy interface? First of all, NumPy is an extension to the Python programming language, with support for large, multi-dimensional arrays and matrices, and a large library of high-level mathematical functions that operate on these abstractions. If you are just beginning to learn deep learning, you should absolutely start with NumPy to gain a firm grasp of its concepts (see, for example, Stanford's [CS231n course](http://cs231n.stanford.edu/syllabus.html)). For quick prototyping of advanced deep learning algorithms, you may well start composing with NumPy as well.

Second, as an extension of Python, your implementation follows the intuitive imperative style. This is the **only** style, and there are **no** new syntax constructs to learn. To get a taste of this, let's look at some examples below.

## Printing and Debugging

| 68 | + |

| 69 | + |

| 70 | + |

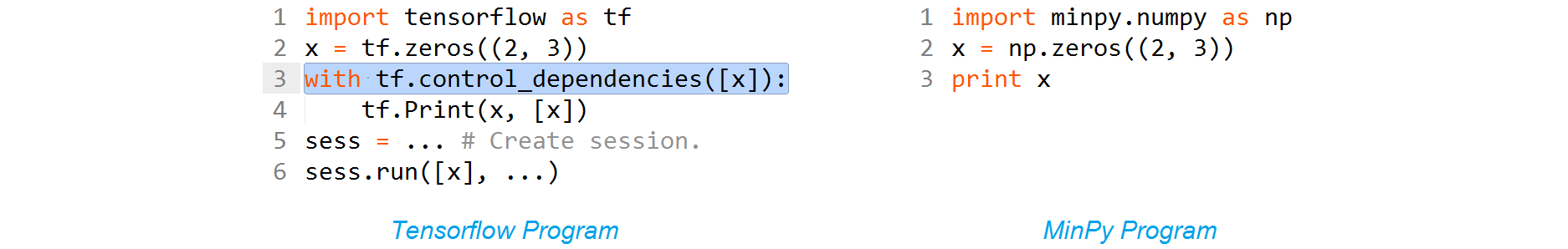

| 71 | +In symbolic programming, the control dependency before the print statement is required, otherwise the print operator will not appear on the critical dependency path and thus not being executed. In contrast, MinPy is simply NumPy, as straightforward as Python's hello world. |
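As a minimal sketch of the imperative side, printing an intermediate value is just an ordinary `print` call in the middle of the computation. The code uses plain NumPy so it runs without MinPy installed; we assume, based on the claim that MinPy is simply NumPy, that `import minpy.numpy as np` would behave the same here. `relu_layer` is a hypothetical helper.

~~~python
import numpy as np  # assumption: `import minpy.numpy as np` would work the same way


def relu_layer(x, w):
    """Hypothetical layer: affine transform followed by ReLU."""
    h = np.dot(x, w)
    # Runs immediately, in program order: no control dependency or print operator needed.
    print("pre-activation:", h)
    return np.maximum(h, 0)


out = relu_layer(np.array([[1.0, -2.0]]), np.eye(2))
~~~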

## Data-dependent branches

| 74 | + |

| 75 | + |

| 76 | + |

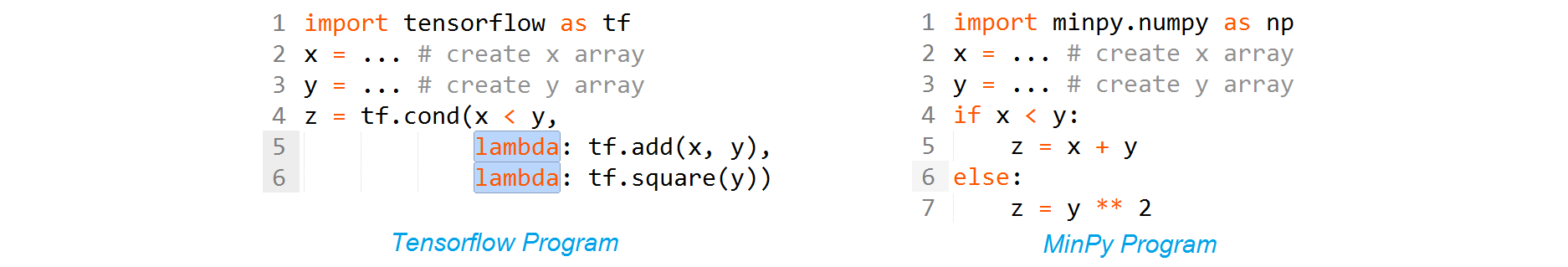

| 77 | +In symbolic programming, the `lambda` is required in each branch to lazily expand the dataflow graph during runtime, which can be quite confusing. Again, MinPy is NumPy, and you freely use the if statement anyway you like. |
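For instance, rescaling a vector only when its norm exceeds a threshold (the idea behind gradient clipping) is a branch on a value computed one line earlier. The sketch below uses plain NumPy so it runs anywhere; `clip_by_norm` is a hypothetical helper, and we have not checked whether MinPy runs `np.linalg.norm` natively or through its NumPy fallback.

~~~python
import numpy as np  # assumption: minpy.numpy could be substituted here


def clip_by_norm(x, max_norm=1.0):
    """Hypothetical helper: rescale x so that its L2 norm is at most max_norm."""
    norm = np.linalg.norm(x)
    # A plain Python `if` on a freshly computed value: no lambdas or special cond operator.
    if norm > max_norm:
        return x * (max_norm / norm)
    return x


clipped = clip_by_norm(np.array([3.0, 4.0]))    # norm 5.0 > 1.0, so it is rescaled
unchanged = clip_by_norm(np.array([0.3, 0.4]))  # norm 0.5 <= 1.0, so it is returned as-is
~~~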

TensorFlow is just one typical example; many other packages (e.g. Theano, or even MXNet) have similar problems. The underlying reason is the trade-off between *symbolic programming* and *imperative programming*. Code in symbolic programs (TensorFlow and Theano) generates a dataflow graph instead of performing concrete computation. This enables extensive optimizations, but requires reinventing almost all language constructs (such as `if` and loops). Imperative programs (NumPy) generate the dataflow graph *along with* the computation, letting you freely query or use the value just computed.
In MinPy, we use NumPy syntax to ease your programming while still achieving good performance.

## Dynamic automatic gradient computation

Automatic gradient computation has become essential in modern deep learning systems. In MinPy, we adopt [Autograd](https://github.com/HIPS/autograd)'s approach to computing gradients. Since the dataflow graph is generated along with the computation, all kinds of native control flow are supported during gradient computation. For example:

~~~python
import minpy
from minpy.core import grad

def foo(x):
    if x >= 0:
        return x
    else:
        return 2 * x

foo_grad = grad(foo)
print(foo_grad(3))   # should print 1.0
print(foo_grad(-1))  # should print 2.0
~~~

Here, you can freely use the native `if` statement. A complete tutorial on automatic gradient computation can be found [here](https://minpy.readthedocs.io/en/latest/tutorial/autograd_tutorial.html).
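Why native control flow works can be illustrated with a toy reverse-mode tape in pure Python: the graph is recorded while `foo` actually runs, so only the branch that was taken ends up in it. This is a hypothetical sketch for scalars supporting only multiplication and `>=`; `Var`, `backward` and `toy_grad` are made-up names, and this is not MinPy's or Autograd's actual implementation.

~~~python
class Var:
    """A scalar that records how it was computed (hypothetical minimal tape)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuple of (parent Var, d(self)/d(parent))
        self.grad = 0.0

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(float(other))
        # d(a*b)/da = b and d(a*b)/db = a, recorded as the product is computed.
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    __rmul__ = __mul__

    def __ge__(self, other):
        # Returns a plain bool, so a native `if` can branch on it.
        return self.value >= (other.value if isinstance(other, Var) else other)


def backward(out):
    """Reverse-mode pass over the graph recorded during the forward run."""
    order, seen = [], set()

    def visit(v):  # depth-first topological sort
        if id(v) not in seen:
            seen.add(id(v))
            for parent, _ in v.parents:
                visit(parent)
            order.append(v)

    visit(out)
    out.grad = 1.0
    for v in reversed(order):
        for parent, local in v.parents:
            parent.grad += local * v.grad


def toy_grad(fn):
    """Return a function computing d fn / dx at a scalar x."""
    def grad_fn(x):
        x_var = Var(float(x))
        backward(fn(x_var))
        return x_var.grad
    return grad_fn


def foo(x):
    if x >= 0:
        return x
    else:
        return 2 * x


foo_grad = toy_grad(foo)
~~~

With this toy, `foo_grad(3)` returns `1.0` and `foo_grad(-1)` returns `2.0`, matching the MinPy example.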

## Elegant fallback for missing operators

Nobody likes `NotImplementedError`, and neither do we. NumPy is a very large library, so in MinPy we automatically fall back to NumPy if an operator has not been implemented in MXNet yet. For example, the following code runs smoothly, and you don't need to worry about copying arrays back and forth between GPU and CPU; MinPy handles the fallback and its side effects transparently.

~~~python
import minpy.numpy as np
x = np.zeros((2, 3))    # Use MXNet GPU implementation
y = np.ones((2, 3))     # Use MXNet GPU implementation
z = np.logaddexp(x, y)  # Use NumPy CPU implementation
~~~
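A minimal sketch of how such a fallback could be dispatched, assuming a hypothetical registry `BACKEND_OPS` of operators the accelerated backend implements. Here NumPy stands in for MXNet so the code runs anywhere, and the GPU-to-CPU copy that MinPy performs is only marked by a comment; `call_op` and `dispatch_log` are illustrative names, not MinPy's API.

~~~python
import numpy as np

# Hypothetical stand-in for the accelerated backend's operator table.
# In MinPy this role is played by MXNet; NumPy simulates it here.
BACKEND_OPS = {
    "zeros": np.zeros,
    "ones": np.ones,
}

dispatch_log = []  # records which path each call took, for illustration


def call_op(name, *args, **kwargs):
    """Run `name` on the backend if it is registered, otherwise fall back to NumPy."""
    impl = BACKEND_OPS.get(name)
    if impl is not None:
        dispatch_log.append((name, "backend"))
        return impl(*args, **kwargs)
    # Fallback path: a real system would also copy inputs from GPU to CPU here.
    dispatch_log.append((name, "numpy-fallback"))
    return getattr(np, name)(*args, **kwargs)


x = call_op("zeros", (2, 3))
y = call_op("ones", (2, 3))
z = call_op("logaddexp", x, y)
~~~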

## Seamless MXNet symbol support

Although we pick the imperative side, we understand that symbolic programming is necessary for operators like convolution. Therefore, MinPy allows you to "wrap" a symbol into a function that can be called together with other imperative calls. From a programmer's perspective, these functions are just like other NumPy calls, so the imperative style is preserved throughout:

~~~python
import mxnet as mx
import minpy.numpy as np
from minpy.core import Function
# Create Function from symbol.
net = mx.sym.Variable('x')
net = mx.sym.Convolution(net, name='conv', kernel=(3, 3), num_filter=32, no_bias=True)
conv = Function(net, input_shapes={'x': (8, 3, 10, 10)})
# Call Function as normal function.
x = np.zeros((8, 3, 10, 10))
w = np.ones((32, 3, 3, 3))
y = np.exp(conv(x=x, conv_weight=w))
~~~
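The calling convention can be mimicked without MXNet by a small wrapper: input shapes are fixed when the wrapper is built, and the result is an ordinary callable whose output mixes freely with NumPy calls. `WrappedGraph` is a hypothetical class for illustration only; it is not MinPy's `minpy.core.Function`, and a tiny fully connected computation stands in for the convolution symbol.

~~~python
import numpy as np


class WrappedGraph:
    """Hypothetical wrapper turning a fixed computation into a plain callable.

    Mimics only the calling convention: input shapes are declared up front
    and checked on every call.
    """

    def __init__(self, graph_fn, input_shapes):
        self.graph_fn = graph_fn          # stands in for a symbol: a function of named inputs
        self.input_shapes = input_shapes  # e.g. {'x': (8, 3)}

    def __call__(self, **inputs):
        for name, shape in self.input_shapes.items():
            if inputs[name].shape != tuple(shape):
                raise ValueError(f"{name}: expected shape {shape}, got {inputs[name].shape}")
        return self.graph_fn(**inputs)


# A tiny fully connected "symbol" standing in for the convolution.
dense = WrappedGraph(lambda x, weight: np.dot(x, weight.T), input_shapes={'x': (8, 3)})
x = np.zeros((8, 3))
w = np.ones((4, 3))
y = np.exp(dense(x=x, weight=w))  # used like any other NumPy call
~~~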

## Is MinPy fast?

| 133 | + |

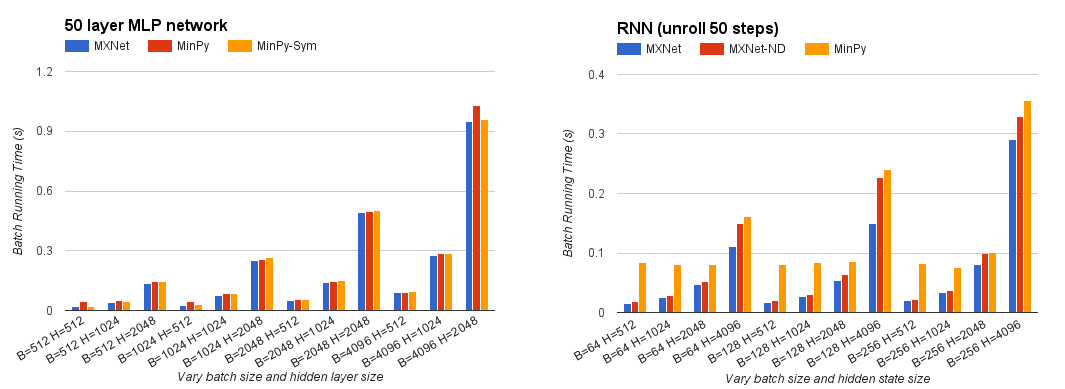

| 134 | +The imperative interface does raise many challenges, especially it foregoes some of the deep optimization that only (currently) embodied in symbolic programming. However, MinPy manages to retain reasonable performance, especially when the actual computation is intense. Our next target is to get back the performance with advanced system techniques. |

## CS231n -- a Perfect Intro for Newcomers

For deep learning beginners, MinPy is a perfect tool to start with. One reason is that MinPy is fully compatible with NumPy, so existing NumPy code needs almost no modification. In addition, our team provides a modified version of the CS231n assignments that showcases the conveniences of MinPy.
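To illustrate the drop-in claim, here is a fully connected forward pass written in the style of the CS231n assignments using plain NumPy. `affine_forward` is a hypothetical sketch rather than code from the actual assignments, and the idea that switching to `import minpy.numpy as np` needs no other change is an assumption based on the compatibility described above.

~~~python
import numpy as np  # assumption: `import minpy.numpy as np` should be a drop-in swap


def affine_forward(x, w, b):
    """Hypothetical fully connected layer in the style of the CS231n assignments."""
    n = x.shape[0]
    out = x.reshape(n, -1).dot(w) + b  # flatten each example, then compute x @ w + b
    cache = (x, w, b)                  # kept for a later backward pass
    return out, cache


x = np.ones((2, 3, 4))      # 2 examples, each with 3 * 4 = 12 features
w = np.ones((12, 5)) * 0.5  # maps 12 features to 5 outputs
b = np.zeros(5)
out, _ = affine_forward(x, w, b)
~~~

Each output entry is a sum of 12 products of `1.0 * 0.5`, so `out` is a `(2, 5)` array filled with `6.0`.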

[CS231n](http://cs231n.stanford.edu/) is an introductory deep learning course taught at Stanford University by Professor [Fei-Fei Li](http://vision.stanford.edu/feifeili/) and her Ph.D. students [Andrej Karpathy](http://cs.stanford.edu/people/karpathy/) and [Justin Johnson](http://cs.stanford.edu/people/jcjohns/). The curriculum covers all the basics of deep learning, including convolutional neural networks and recurrent neural networks. The course assignments are not just simple practice of the lecture contents, but an in-depth, step-by-step journey for students to explore the latest applications of deep learning. Since MinPy's interface is similar to NumPy's, our team modified the CS231n assignments to demonstrate the features MinPy provides, creating an integrated learning experience for students who are learning deep learning for the first time. The MinPy version of CS231n has already been used in deep learning courses at Shanghai Jiao Tong University and ShanghaiTech University.

## Summary

The MinPy development team began with the [Minerva](https://github.com/dmlc/minerva) project. After co-founding the MXNet project with the community, we contributed to the core code of MXNet, including its executor engine, IO, and Caffe operator plugin. Having completed that part, the team decided to take a step back and rethink the user experience before moving on to yet another ambitious stage of performance optimization. We strive to provide maximum flexibility for users while creating space for more advanced system optimizations. MinPy is pure NumPy and purely imperative. It will be merged into MXNet in the near future.

Enjoy! Please send us your feedback.

## Links

* GitHub: <https://github.com/dmlc/minpy>
* MinPy documentation: <http://minpy.readthedocs.io/en/latest/>

## Acknowledgements

- [MXNet Community](http://dmlc.ml/)
- Sean Welleck (Ph.D. at NYU), Alex Gai and Murphy Li (undergrads at NYU Shanghai)
- Professor [Yi Ma](http://sist.shanghaitech.edu.cn/StaffDetail.asp?id=387) and Postdoc Xu Zhou at ShanghaiTech University; Professor [Kai Yu](https://speechlab.sjtu.edu.cn/~kyu/) and Professor [Weinan Zhang](http://wnzhang.net/) at Shanghai Jiao Tong University.

## MinPy Developers

- Project Lead Minjie Wang (NYU) [GitHub](https://github.com/jermainewang)
- Larry Tang (U of Michigan*) [GitHub](https://github.com/lryta)
- Yutian Li (Stanford) [GitHub](https://github.com/hotpxl)
- Haoran Wang (CMU) [GitHub](https://github.com/HrWangChengdu)
- Tianjun Xiao (Tesla) [GitHub](https://github.com/sneakerkg)
- Ziheng Jiang (Fudan U*) [GitHub](https://github.com/ZihengJiang)
- Professor [Zheng Zhang](https://shanghai.nyu.edu/academics/faculty/directory/zheng-zhang) (NYU Shanghai) [GitHub](https://github.com/zzhang-cn)

*: MinPy was completed during an NYU Shanghai research internship.

## Reference

1. <http://mxnet.io/architecture/program_model.html>
