Description

The Kubernetes plugin may leak thread pool executor threads over time, as in the following thread dump entry:

    "-109914906-pool-56-thread-10" id=6547 state=TIMED_WAITING
        - waiting on <0x7a4dbe2c> (a java.util.concurrent.SynchronousQueue$Transferer)
        - locked <0x7a4dbe2c> (a java.util.concurrent.SynchronousQueue$Transferer)
        at java.base@21.0.8/jdk.internal.misc.Unsafe.park(Native Method)
        at java.base@21.0.8/java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:410)
        at java.base@21.0.8/java.util.concurrent.LinkedTransferQueue$DualNode.await(LinkedTransferQueue.java:452)
        at java.base@21.0.8/java.util.concurrent.SynchronousQueue$Transferer.xferLifo(SynchronousQueue.java:194)
        at java.base@21.0.8/java.util.concurrent.SynchronousQueue.xfer(SynchronousQueue.java:235)
        at java.base@21.0.8/java.util.concurrent.SynchronousQueue.poll(SynchronousQueue.java:338)
        at java.base@21.0.8/java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1069)
        at java.base@21.0.8/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1130)
        at java.base@21.0.8/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)
        at java.base@21.0.8/java.lang.Thread.runWith(Thread.java:1596)
        at java.base@21.0.8/java.lang.Thread.run(Thread.java:1583)

The rate at which this happens is unclear. The impact depends on the activity and on the number of Kubernetes Clouds configured. It seems related to the expiration of cached clients, which does not clean up their resources. More details below.

How to Reproduce

- Spin up Jenkins

- Configure a Kubernetes Cloud with fake credentials (credentials that do not work and will make the Kubernetes client fail)

- Create one template in that Kubernetes Cloud

- Create a Pipeline that requests that Pod template

- Build the pipeline

--> The pipeline will be stuck in the queue, and the Kubernetes plugin will keep trying to spin up an agent and failing. About every 10 minutes, you will notice a leaked thread.
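To watch for the leak while reproducing, one option is to count live threads whose names follow the pattern seen in the thread dump. The following is a minimal sketch, not part of the plugin: the class `LeakedPoolThreadCounter` and its regular expression are hypothetical, inferred from the single thread name in this report, and the code would have to run inside the Jenkins JVM to see the relevant threads.

```java
import java.util.regex.Pattern;

final class LeakedPoolThreadCounter {
    // Assumption: leaked threads are named like "-109914906-pool-56-thread-10",
    // i.e. an empty prefix, a numeric id, then "pool-N-thread-M".
    static final Pattern LEAKED_NAME =
            Pattern.compile("^-\\d+-pool-\\d+-thread-\\d+$");

    // Returns true when a thread name matches the assumed pattern.
    static boolean looksLeaked(String threadName) {
        return LEAKED_NAME.matcher(threadName).matches();
    }

    // Counts live threads in the current JVM whose names match the pattern.
    static long countLeakedThreads() {
        return Thread.getAllStackTraces().keySet().stream()
                .map(Thread::getName)
                .filter(LeakedPoolThreadCounter::looksLeaked)
                .count();
    }

    public static void main(String[] args) {
        System.out.println("suspect threads: " + countLeakedThreads());
    }
}
```

If the count grows by roughly one every 10 minutes while the pipeline is stuck in the queue, that would be consistent with the behaviour described above.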

Workaround

If the environment allows it, the cache expiry time can be increased to one hour (org.csanchez.jenkins.plugins.kubernetes.clients.cacheExpiration=3600) or more. Whether this is suitable depends on the environment.
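As an illustration of the value involved, the sketch below builds the setting from a duration. It assumes the setting is passed to the Jenkins controller as a JVM system property (a `-D` flag) and that the value is in seconds, which matches 3600 for one hour; the helper class `CacheExpirationFlag` is hypothetical.

```java
import java.time.Duration;

final class CacheExpirationFlag {
    // Property name taken from the workaround above.
    static final String PROPERTY =
            "org.csanchez.jenkins.plugins.kubernetes.clients.cacheExpiration";

    // Assumption: the property is a JVM system property expressed in seconds.
    static String jvmFlag(Duration expiry) {
        return "-D" + PROPERTY + "=" + expiry.getSeconds();
    }

    public static void main(String[] args) {
        // One hour, as suggested in the workaround.
        System.out.println(jvmFlag(Duration.ofHours(1)));
    }
}
```

A longer expiry only reduces how often clients expire; it would slow the leak rather than remove it.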

Notes

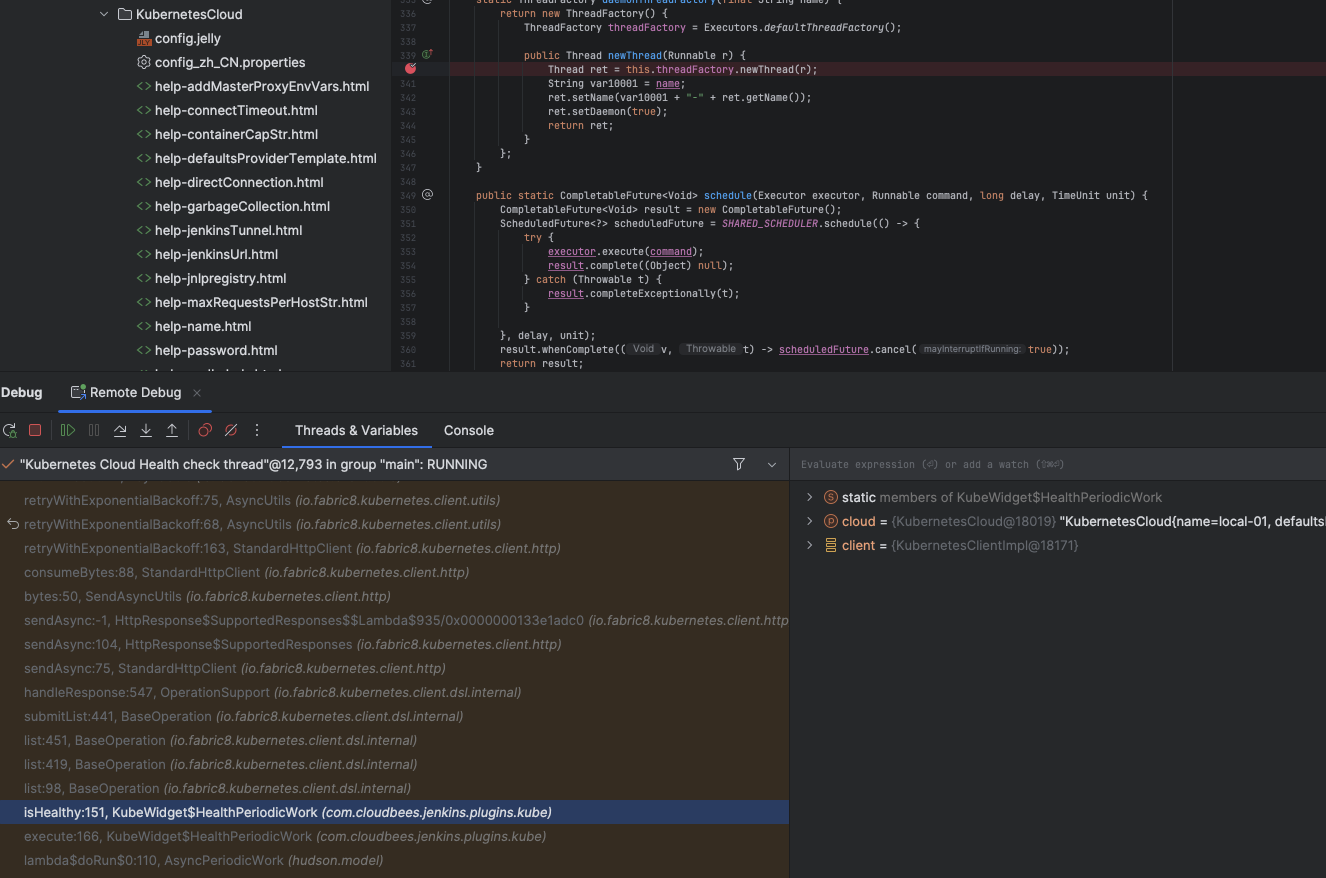

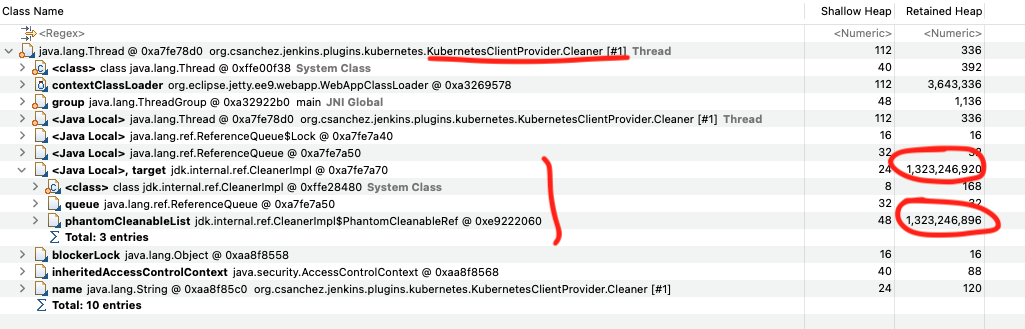

With a heapdump, it was evident that the ThreadFactory that generated the thread is the one

The first term in the prefixed name is empty because it originates from an anonymous class in BaseClient:

Noticing that in a simple scenario with one Kubernetes Cloud, one thread is leaked every 10 minutes, I narrowed this down to the management of cached clients in KubernetesClientProvider, which expires Kubernetes clients after 10 minutes without explicitly closing them.
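The suspected mechanism can be sketched in isolation. The example below is not the plugin's code: `FakeClient` is a hypothetical stand-in for a client that owns a thread pool, and the map is a hypothetical stand-in for the client cache. It only illustrates that dropping a cached entry without closing it leaves its executor running, while closing on eviction shuts it down.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class ClosingCacheSketch {
    /** Hypothetical stand-in for a Kubernetes client that owns a thread pool. */
    static final class FakeClient implements AutoCloseable {
        final ExecutorService executor = Executors.newCachedThreadPool();

        FakeClient() {
            // Submit one task so the pool actually starts a worker thread.
            executor.submit(() -> { });
        }

        @Override
        public void close() {
            executor.shutdownNow();
        }
    }

    /** Hypothetical cache keyed by cloud name. */
    static final Map<String, FakeClient> CACHE = new ConcurrentHashMap<>();

    /** Leaky eviction: drops the entry but never shuts the executor down. */
    static FakeClient evictWithoutClose(String key) {
        return CACHE.remove(key);
    }

    /** Eviction that also releases the client's resources. */
    static FakeClient evictAndClose(String key) {
        FakeClient client = CACHE.remove(key);
        if (client != null) {
            client.close();
        }
        return client;
    }

    public static void main(String[] args) {
        CACHE.put("leaky", new FakeClient());
        CACHE.put("closed", new FakeClient());

        FakeClient leaked = evictWithoutClose("leaky");
        FakeClient closed = evictAndClose("closed");

        System.out.println("leaky executor shut down: " + leaked.executor.isShutdown());
        System.out.println("closed executor shut down: " + closed.executor.isShutdown());

        // Clean up so this demo JVM can exit promptly.
        leaked.close();
    }
}
```

In a real cache, the equivalent of `evictAndClose` would typically be wired into whatever eviction or expiry hook the cache implementation offers, provided no other caller still holds the evicted client.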

Originally reported by  allan_burdajewicz, imported from: Kubernetes client leaks Thread Pool Executors

- assignee: allan_burdajewicz

- status: Open

- priority: Major

- component(s): kubernetes-plugin

- resolution: Unresolved

- votes: 0

- watchers: 4

- imported: 2025-12-02

- environment: kubernetes:4371.vb_33b_086d54a_1