A modern template for agentic orchestration, built for rapid iteration and scalable deployment using highly customizable, community-supported tools like MCP, LangGraph, and more.

Note

Read the docs with demo videos here. This repo will not contain demo videos.

- FastAPI MCP LangGraph Template

- Seamlessly integrates LLMs with a growing list of community integrations found here

- No LLM provider lock-in

- Native streaming for UX in complex Agentic Workflows

- Native persisted chat history and state management

FastAPI for Python backend API

for Python SQL database interactions (ORM + Validation).

Langfuse for LLM Observability and LLM Metrics

for Data Validation and Settings Management.

Supabase for DB RBAC

Nginx Reverse Proxy

Docker Compose for development and production.

for scraping Metrics

Grafana for visualizing Metrics

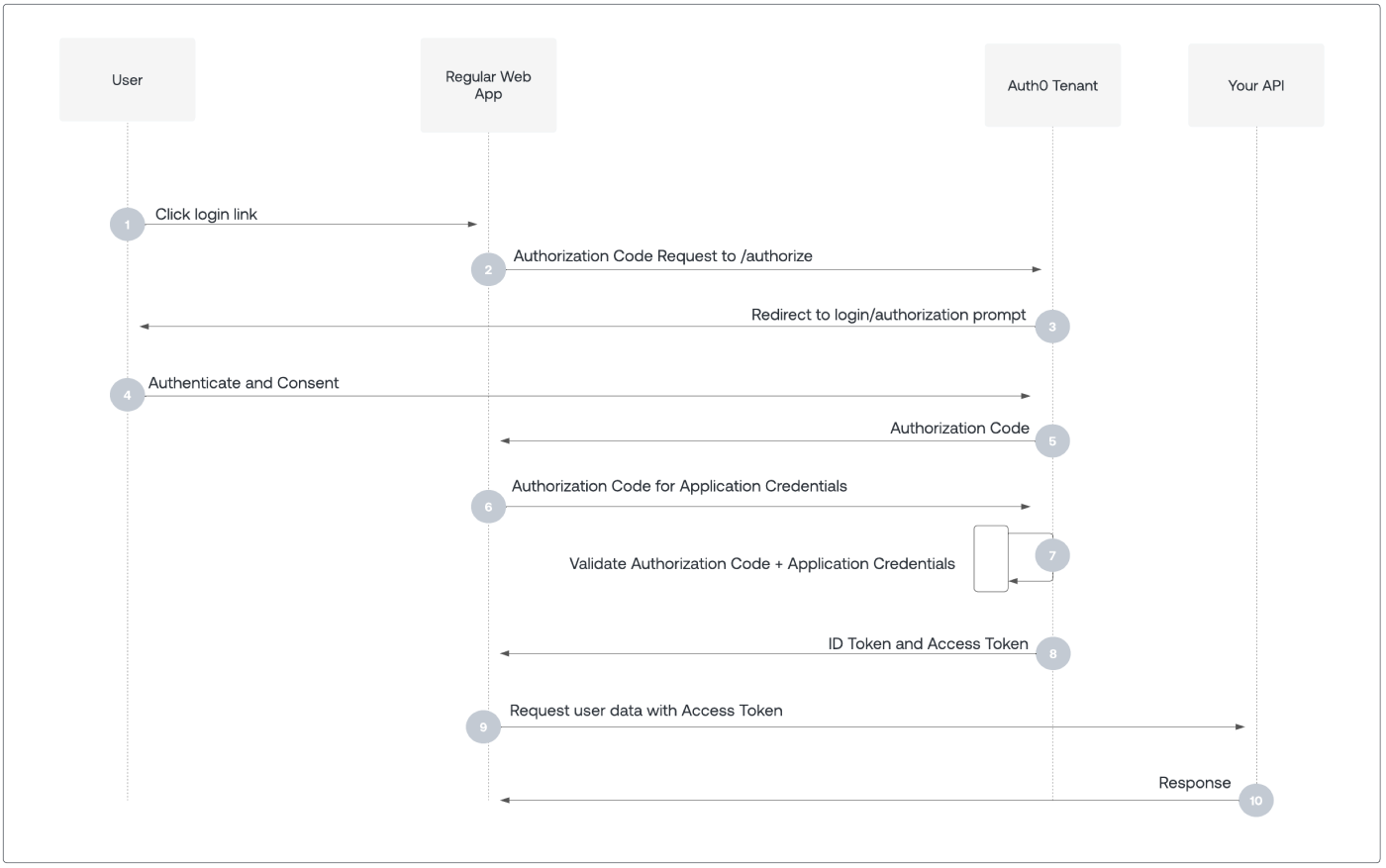

SaaS for Authentication and Authorization with OIDC & JWT via OAuth 2.0

- CI/CD via GitHub Actions

This section outlines the architecture of the services, their interactions, and planned features.

The Inspector communicates with each MCP server over SSE, and each server adheres to the MCP specification.

```mermaid
graph LR
  subgraph localhost
    A[Inspector]
    B[DBHub Server]
    C[Youtube Server]
    D[Custom Server]
  end
  subgraph Supabase Cloud
    E[Supabase DB]
  end
  subgraph Google Cloud
    F[Youtube API]
  end
  A<-->|Protocol|B
  A<-->|Protocol|C
  A<-->|Protocol|D
  B<-->E
  C<-->F
```
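To make the Inspector-to-server link a little more concrete, the sketch below parses a Server-Sent Events stream into events and builds a JSON-RPC 2.0 `tools/list` request, the kind of message MCP exchanges. It uses only the standard library. The names `parse_sse` and `jsonrpc_request` and the sample stream are illustrative assumptions, not part of this template or of any MCP SDK, and the parser is deliberately simplified.

```python
import json
from typing import Iterable, Iterator, Optional


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per SSE event from an iterable of text lines.

    Simplified: only `event:` and `data:` fields and comment lines are handled.
    """
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment or keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


def jsonrpc_request(method: str, request_id: int, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Hypothetical stream contents, for illustration only.
sample_stream = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n',
    "\n",
]
events = list(parse_sse(sample_stream))
request = jsonrpc_request("tools/list", request_id=1)
```

In practice an MCP client library would handle framing and session setup; this only shows the shape of the traffic on the `Protocol` edges in the diagram.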

The current template does not connect to all MCP servers. Additionally, the API server communicates with the database using a SQL ORM.

```mermaid
graph LR
  subgraph localhost
    A[API Server]
    B[DBHub Server]
    C[Youtube Server]
    D[Custom Server]
  end
  subgraph Supabase Cloud
    E[Supabase DB]
  end
  A<-->|Protocol|D
  A<-->E
```
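The API server reaches the database through a connection string (the `POSTGRES_DSN` variable in the setup section). As a rough illustration of what such a string carries, the sketch below splits a DSN into its parts with the standard library and masks the password so the result is safe to log. The function name `describe_dsn`, the default port fallback, and the example DSN are assumptions for illustration; driver-qualified schemes such as `postgresql+psycopg` are not handled.

```python
from urllib.parse import urlsplit


def describe_dsn(dsn: str) -> dict:
    """Return the components of a PostgreSQL DSN with the password masked."""
    parts = urlsplit(dsn)
    if parts.scheme not in {"postgresql", "postgres"}:
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    return {
        "user": parts.username,
        "password": "***" if parts.password else None,  # never log the real value
        "host": parts.hostname,
        "port": parts.port or 5432,  # assumed default when the DSN omits a port
        "database": parts.path.lstrip("/") or None,
    }


# Hypothetical DSN, for illustration only.
info = describe_dsn("postgresql://postgres:secret@db.example.com:6543/postgres")
```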

This setup can be extended to other services, such as a frontend, or to backend services that are self-hosted rather than run in the cloud (e.g., Langfuse).

```mermaid
graph LR
  A[Web Browser]
  subgraph localhost
    B[Nginx Reverse Proxy]
    C[API Server]
  end
  A-->B
  B-->C
```

```mermaid
graph LR
  subgraph localhost
    A[API Server]
  end
  subgraph Grafana Cloud
    B[Grafana]
  end
  subgraph Langfuse Cloud
    C[Langfuse]
  end
  A -->|Metrics & Logs| B
  A -->|Traces & Events| C
```

Setup to run the repository in both production and development environments.

Build the community YouTube MCP image with:

```bash
./community/youtube/build.sh
```

:::tip

Instead of cloning or submoduling the repository locally and then building the image, this script builds the Docker image inside a temporary Docker-in-Docker container. This approach avoids polluting your local environment with throwaway files, because everything is cleaned up once the container exits.

:::

Then build the other images with:

```bash
docker compose -f compose-dev.yaml build
```

Copy the environment file:

```bash
cp .env.sample .env
```

Add the following API keys and values to the respective files: `./envs/backend.env`, `./envs/youtube.env` and `.env`.

```bash
OPENAI_API_KEY=sk-proj-...
POSTGRES_DSN=postgresql://postgres...

LANGFUSE_PUBLIC_KEY=pk-lf-...
LANGFUSE_SECRET_KEY=sk-lf-...
LANGFUSE_HOST=https://cloud.langfuse.com

ENVIRONMENT=production

YOUTUBE_API_KEY=...
```

Set the environment variables in your shell (compatible with bash and zsh):

```bash
set -a; for env_file in ./envs/*; do source "$env_file"; done; set +a
```

Start the production containers:

```bash
docker compose up -d
```

First, set environment variables as per above.
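As a rough pre-flight check on those variables, the sketch below reads `KEY=VALUE` files and reports which expected keys are missing or empty. The key names come from the sample above; the helper names (`parse_env_text`, `missing_keys`, `load_env_files`) are hypothetical, and treating every key as required across all files is an assumption rather than something this template enforces. The parser is minimal and does not handle `export` prefixes or multi-line values.

```python
from pathlib import Path

# Keys copied from the sample env listing; requiring all of them is an assumption.
REQUIRED_KEYS = {
    "OPENAI_API_KEY",
    "POSTGRES_DSN",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_HOST",
    "ENVIRONMENT",
    "YOUTUBE_API_KEY",
}


def parse_env_text(text):
    """Parse KEY=VALUE lines, skipping blanks, comments, and malformed lines."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and one layer of matching-style quotes.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env_texts, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty across all texts."""
    found = {}
    for text in env_texts:
        found.update(parse_env_text(text))
    return {key for key in required if not found.get(key)}


def load_env_files(directory="./envs"):
    """Read every regular file in the env directory as text."""
    return [p.read_text() for p in sorted(Path(directory).glob("*")) if p.is_file()]
```

A possible usage is `print(missing_keys(load_env_files()))` from the repository root before running `docker compose up -d`; an empty set means every expected key has a value.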

:::warning

Only replace the following if you plan to start the debugger for the FastAPI server in VSCode.

:::

Replace the `api` entrypoint in `./compose-dev.yaml` to allow debugging the FastAPI server:

```yaml
api:
  image: api:prod
  build:
    dockerfile: ./backend/api/Dockerfile
  # highlight-next-line
  entrypoint: bash -c "sleep infinity"
  env_file:
    - ./envs/backend.env
```

Then:

```bash
code --no-sandbox .
```

Press F1 and type `Dev Containers: Rebuild and Reopen in Container` to open a containerized environment with IntelliSense and a debugger for FastAPI.

Run the development environment with:

```bash
docker compose -f compose-dev.yaml up -d
```

Sometimes in development, the nginx reverse proxy needs to reload its config to route services properly:

```bash
docker compose -f compose-dev.yaml exec nginx sh -c "nginx -s reload"
```

The following markdown files provide additional details on other features:

Note

Click above to view the live star history. As per their article, Ongoing Broken Live Chart, you can still use this website to view and download charts (though you may need to provide your own token).