-

Notifications

You must be signed in to change notification settings - Fork 0

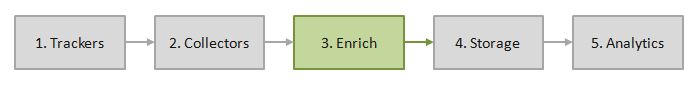

Enrichment

The Snowplow Enrichment step takes the raw log files generated by the Snowplow collectors, tidies the data up and enriches it so that it is:

- Ready in S3 to be analysed using EMR

- Ready to be uploaded into Amazon Redshift, PostgreSQL or another storage mechanism for analysis

The Enrichment process is written using Scalding, a Scala API for Cascading, which is an ETL library built on top of Hadoop. Historically, we have referred to the Enrichment process as the Hadoop-ETL, to distinguish it from the Hive-based ETL that preceded it. The Hive ETL has since been deprecated.
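
The real process is a Scalding job running on Hadoop. Purely to illustrate the tidy-then-enrich shape described above, here is a minimal Python sketch. The three-column raw line layout, the `e` and `url` parameter names, the output field names and the functions `enrich_line` and `to_tsv` are all assumptions made for this example; they are not the actual collector format or Snowplow code.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical, simplified raw line: "<timestamp>\t<ip>\t<querystring>".
# Real collector logs are richer; this layout is an assumption.


def enrich_line(raw_line):
    """Return an enriched event dict, or None if the line is malformed."""
    parts = raw_line.rstrip("\n").split("\t")
    if len(parts) != 3:
        return None  # tidy step: discard lines that do not match the layout
    timestamp, ip, querystring = parts

    # Tidy step: decode the URL-encoded querystring into named parameters.
    params = parse_qs(querystring)
    page_url = params.get("url", [""])[0]

    # Enrich step: derive extra fields (host and path) from the page URL.
    url = urlsplit(page_url)
    return {
        "collector_tstamp": timestamp,
        "user_ipaddress": ip,
        "event": params.get("e", [""])[0],
        "page_url": page_url,
        "page_urlhost": url.netloc,
        "page_urlpath": url.path,
    }


def to_tsv(event):
    """Flatten an enriched event into one tab-separated row for loading."""
    return "\t".join(event.values())
```

Calling `enrich_line` on each raw line and writing `to_tsv` of every non-`None` result would give flat rows of the kind a storage loader could pick up. In the actual process this work is distributed across a Hadoop cluster rather than run line by line in a single script.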

Snowplow uses Amazon's EMR to run the Enrichment process. The regular running of the process (which is necessary to ensure that up-to-date Snowplow data is available for analysis) is managed by EmrEtlRunner, a Ruby application.

In this guide, we cover:

- How EmrEtlRunner orchestrates the regular running of the Enrichment process

- [The Enrichment Process itself][The-enrichment-process]

Copyright © 2012-2013 Snowplow Analytics Ltd
